Disclaimer: I do not build database engines. I build web applications. I run 4-6 different projects every year, so I build a lot of web applications. I see apps with different requirements and different data storage needs. I’ve deployed most of the data stores you’ve heard about, and a few that you probably haven’t.

I’ve picked the wrong one a few times. This is a story about one of those times — why we picked it originally, how we discovered it was wrong, and how we recovered. It all happened on an open source project called Diaspora.

The project

Diaspora is a distributed social network with a long history. Waaaaay back in early 2010, four undergraduates from New York University made a Kickstarter video asking for $10,000 to spend the summer building a distributed alternative to Facebook. They sent it out to friends and family, and hoped for the best.

But they hit a nerve. There had just been another Facebook privacy scandal, and when the dust settled on their Kickstarter, they had raised over $200,000 from 6400 different people for a software project that didn’t yet have a single line of code written.

Diaspora was the first Kickstarter project to vastly overrun its goal. As a result, they got written up in the New York Times – which turned into a bit of a scandal, because the chalkboard in the backdrop of the team photo had a dirty joke written on it, and no one noticed until it was actually printed. In the NEW YORK TIMES. The fallout from that was actually how I first heard about the project.

As a result of their Kickstarter success, the guys left school and came out to San Francisco to start writing code. They ended up in my office. I was working at Pivotal Labs at the time, and one of the guys’ older brothers also worked there, so Pivotal offered them free desk space, internet, and, of course, access to the beer fridge. I worked with official clients during the day, then hung out with them after work and contributed code on weekends.

They ended up staying at Pivotal for more than two years. By the end of that first summer, though, they already had a minimal but working (for some definition) implementation of a distributed social network built in Ruby on Rails and backed by MongoDB.

That’s a lot of buzzwords. Let’s break it down.

“Distributed social network”

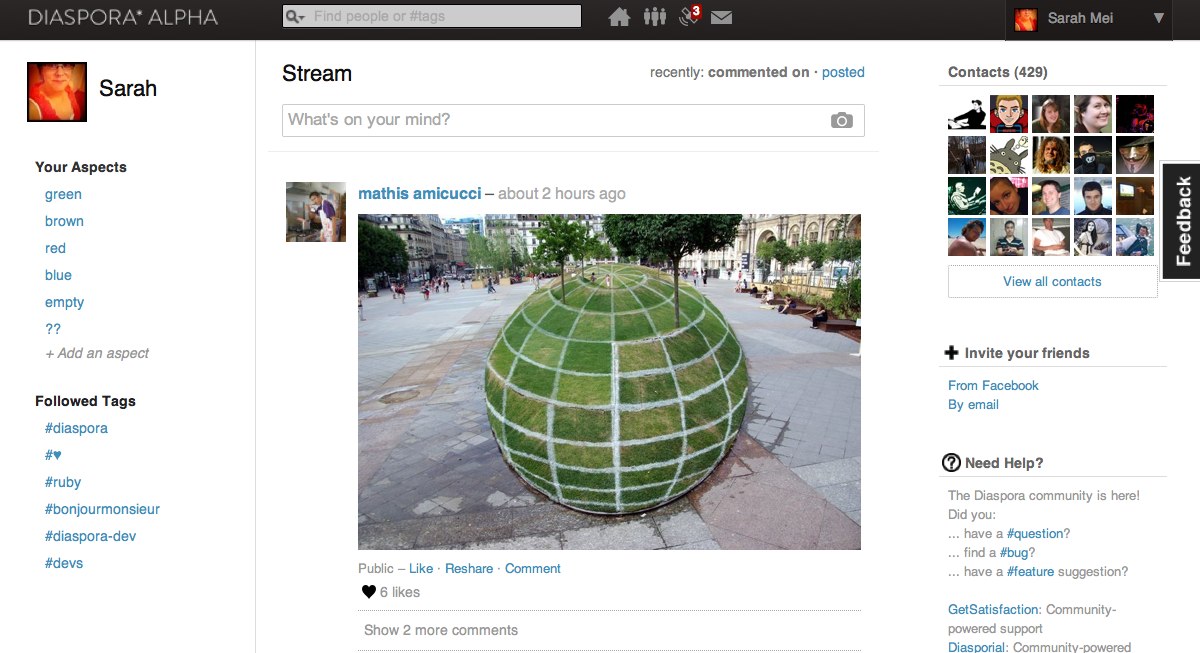

If you’ve seen The Social Network, you know everything you need to know about Facebook. It’s a web app, it runs on a single logical server, and it lets you stay in touch with people. Once you log in, Diaspora’s interface looks structurally similar to Facebook’s:

A screenshot of the Diaspora user interface

There’s a feed in the middle showing all your friends’ posts, and some other random stuff along the sides that no one has ever looked at. The main technical difference between Diaspora and Facebook is invisible to end users: it’s the “distributed” part.

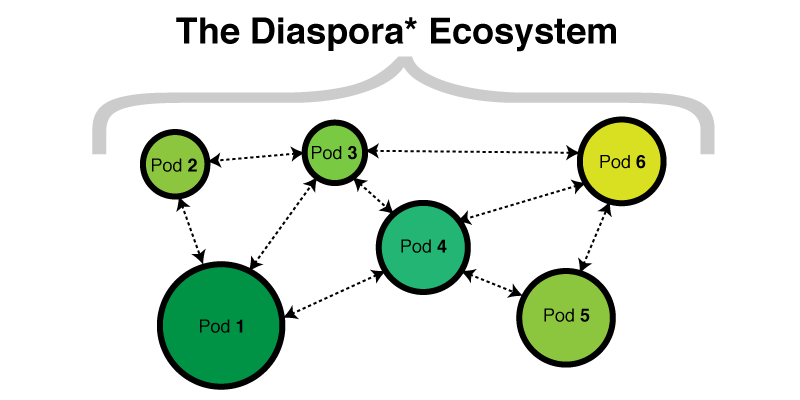

The Diaspora infrastructure is not located behind a single web address. There are hundreds of independent Diaspora servers. The code is open source, so if you want to, you can stand up your own server. Each server, called a pod, has its own database and its own set of users, and will interoperate with all the other Diaspora pods that each have their own database and set of users.

Pods of different sizes communicate with each other, without a central hub.

Each pod communicates with the others through an HTTP-based API. Once you set up an account on a pod, it’ll be pretty boring until you follow some other people. You can follow other users on your pod, and you can also follow people who are users on other pods. When someone you follow on another pod posts an update, here’s what happens:

1. The update goes into the author’s pod’s database.

2. Your pod is notified over the API.

3. The update is saved in your pod’s database.

4. You look at your activity feed and see that post mixed in with posts from the other people you follow.
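Those four steps can be sketched in a few lines of Python. This is only an illustration under loud assumptions: the `Pod` class, its method names, and the `*.example` pod names are all hypothetical, the "database" is a list in memory, and a direct method call stands in for the HTTP request between pods. It is not Diaspora's actual federation code.

```python
class Pod:
    """Hypothetical in-memory stand-in for one pod and its database."""

    def __init__(self, name):
        self.name = name
        self.posts = []        # this pod's own database of posts
        self.followers = {}    # local author -> list of (follower_pod, follower)

    def follow(self, follower, follower_pod, author):
        # Record that `follower`, who lives on `follower_pod`, follows `author` here.
        self.followers.setdefault(author, []).append((follower_pod, follower))

    def publish(self, author, text):
        post = {"author": f"{author}@{self.name}", "text": text}
        self.posts.append(post)                      # step 1: save on the author's pod
        notified = set()
        for pod, _follower in self.followers.get(author, []):
            if pod is not self and id(pod) not in notified:
                pod.receive(post)                    # step 2: notify the other pod
                notified.add(id(pod))
        return post

    def receive(self, post):
        # Step 3: the receiving pod stores its own copy of the post.
        self.posts.append(dict(post))

    def feed(self, followed_authors):
        # Step 4: the feed is built from the local database only.
        return [p for p in self.posts if p["author"] in followed_authors]


home = Pod("home.example")
away = Pod("away.example")
away.follow("you", home, "jane")          # you (on home) follow jane (on away)
away.publish("jane", "Hello from another pod")
print(home.feed({"jane@away.example"}))
```

The point of the sketch is that every pod ends up holding its own copy of the post, which is what lets a pod keep serving feeds when other pods are unreachable.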

Comments work the same way. On any single post, some comments might be from people on the same pod as the post’s author, and some might be from people on other pods. Everyone who has permission to see the post sees all the comments, just as you would expect if everyone were on a single logical server.

Who cares?

There are technical and legal advantages to this architecture. The main technical advantage is fault tolerance.

Here is a very important fault tolerant system that every office should have.

If any one of the pods goes down, it doesn’t bring the others down. The system survives, and even expects, network partitioning. There are some interesting political implications to that — for example, if you’re in a country that shuts down outgoing internet to prevent access to Facebook and Twitter, your pod running locally still connects you to other people within your country, even though nothing outside is accessible.

The main legal advantage is server independence. Each pod is a legally separate entity, governed by the laws of wherever it’s set up. Each pod also sets its own terms of service. On most of them, you can post content without giving up your rights to it, unlike on Facebook. Diaspora is free software both in the “gratis” and the “libre” sense of the term, and most of the people who run pods care deeply about that sort of thing.

So that’s the architecture of the system. Let’s look at the architecture within a single pod.

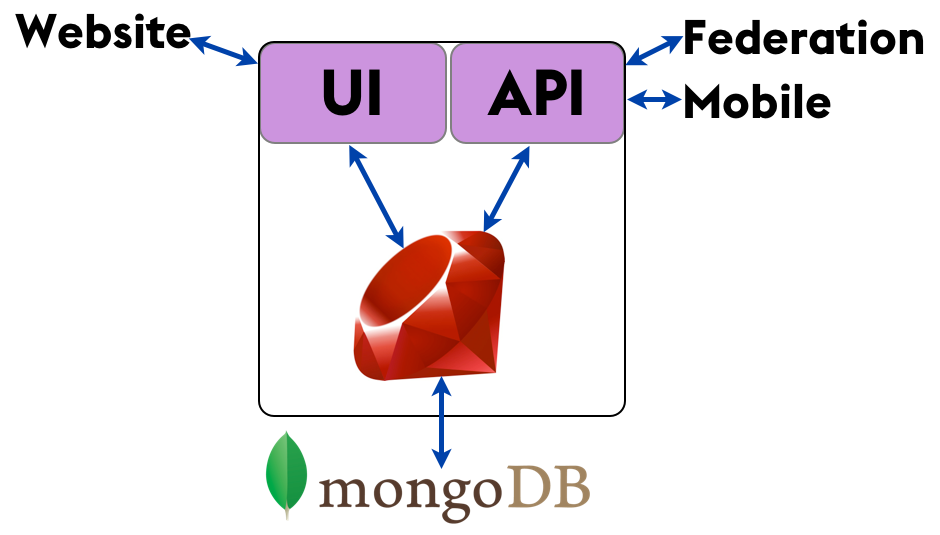

It’s a Rails app.

Each pod is a Ruby on Rails web application backed by a database, originally MongoDB. In some ways the codebase is a ‘typical’ Rails app — it has both a visual and programmatic UI, some Ruby code, and a database. But in other ways it is anything but typical.

The internal structure of one Diaspora pod

The visual UI is of course how website users interact with Diaspora. The API is used by various Diaspora mobile clients — that part’s pretty typical — but it’s also used for “federation,” which is the technical name for inter-pod communication. (I asked where the Romulans’ access point was once, and got a bunch of blank looks. Sigh.) So the distributed nature of the system adds layers to the codebase that aren’t present in a typical app.

And of course, MongoDB is an atypical choice for data storage. The vast majority of Rails applications are backed by PostgreSQL or (less often these days) MySQL.

So that’s the code. Let’s consider what kind of data we’re storing.

I Do Not Think That Word Means What You Think That Means

“Social data” is information about our network of friends, their friends, and their activity. Conceptually, we do think about it as a network — an undirected graph in which we are in the center, and our friends radiate out around us.

Photos all from rubyfriends.com. Thanks Matt Rogers, Steve Klabnik, Nell Shamrell, Katrina Owen, Sam Livingston-Grey, Josh Susser, Akshay Khole, Pradyumna Dandwate, and Hephzibah Watharkar for contributing to #rubyfriends!

When we store social data, we’re storing that graph topology, as well as the activity that moves along those edges.

For quite a few years now, the received wisdom has been that social data is not relational, and that if you store it in a relational database, you’re doing it wrong.

But what are the alternatives? Some folks say graph databases are more natural, but I’m not going to cover those here, since graph databases are too niche to be put into production. Other folks say that document databases are perfect for social data, and those are mainstream enough to actually be used. So let’s look at why people think social data fits more naturally in MongoDB than in PostgreSQL.

How MongoDB Stores Data

MongoDB is a document-oriented database. Instead of storing your data in tables made out of individual rows, like a relational database does, it stores your data in collections made out of individual documents. In MongoDB, a document is a big JSON blob with no particular format or schema.

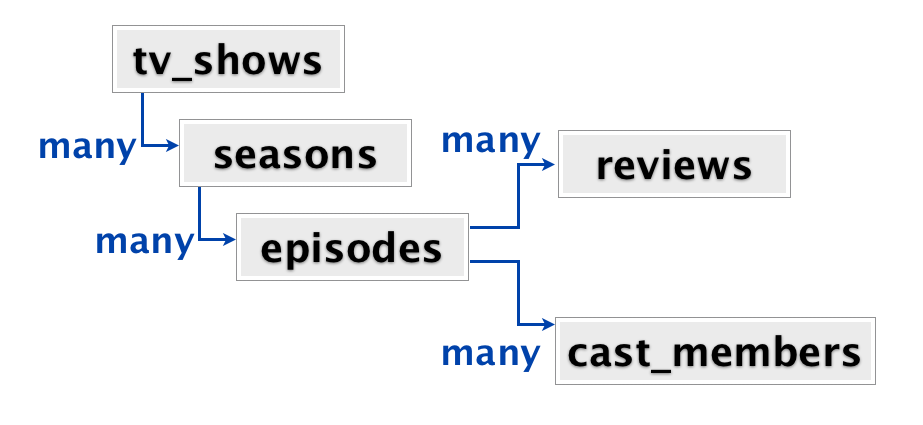

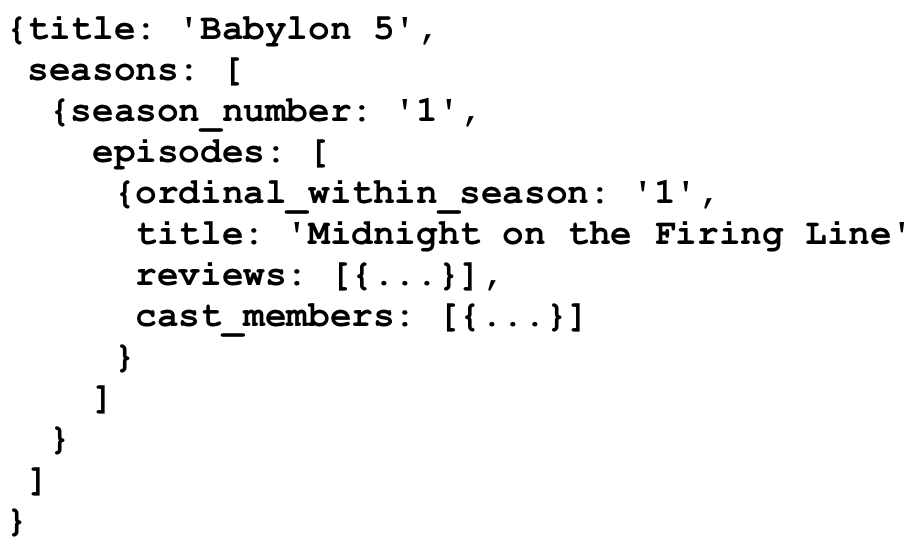

Let’s say you have a set of relationships like this that you need to model. This is quite similar to a project that came through Pivotal that used MongoDB, and was the best use case I’ve ever seen for a document database.

At the root, we have a set of TV shows. Each show has many seasons, each season has many episodes, and each episode has many reviews and many cast members. When users come into this site, typically they go directly to the page for a particular TV show. On that page they see all the seasons and all the episodes and all the reviews and all the cast members from that show, all on one page. So from the application perspective, when the user visits a page, we want to retrieve all of the information connected to that TV show.

There are a number of ways you could model this data. In a typical relational store, each of these boxes would be a table. You’d have a tv_shows table, a seasons table with a foreign key into tv_shows, an episodes table with a foreign key into seasons, and reviews and cast_members tables with foreign keys into episodes. So to get all the information for a TV show, you’re looking at a five-table join.
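To make the relational version concrete, here is a minimal sketch using Python's built-in `sqlite3` module. The table names come from the paragraph above; the column names, the single sample row per table, and the use of SQLite rather than PostgreSQL are my assumptions for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE tv_shows     (id INTEGER PRIMARY KEY, title TEXT);
CREATE TABLE seasons      (id INTEGER PRIMARY KEY,
                           tv_show_id INTEGER REFERENCES tv_shows(id),
                           number INTEGER);
CREATE TABLE episodes     (id INTEGER PRIMARY KEY,
                           season_id INTEGER REFERENCES seasons(id),
                           title TEXT);
CREATE TABLE reviews      (id INTEGER PRIMARY KEY,
                           episode_id INTEGER REFERENCES episodes(id),
                           body TEXT);
CREATE TABLE cast_members (id INTEGER PRIMARY KEY,
                           episode_id INTEGER REFERENCES episodes(id),
                           name TEXT);

-- Placeholder sample data, one row per table.
INSERT INTO tv_shows     VALUES (1, 'Babylon 5');
INSERT INTO seasons      VALUES (1, 1, 1);
INSERT INTO episodes     VALUES (1, 1, 'Example Episode');
INSERT INTO reviews      VALUES (1, 1, 'Example review');
INSERT INTO cast_members VALUES (1, 1, 'Example Actor');
""")

# The five-table join: everything connected to one TV show.
rows = conn.execute("""
SELECT s.title, se.number, e.title, r.body, c.name
FROM tv_shows s
JOIN seasons se          ON se.tv_show_id = s.id
JOIN episodes e          ON e.season_id   = se.id
LEFT JOIN reviews r      ON r.episode_id  = e.id
LEFT JOIN cast_members c ON c.episode_id  = e.id
WHERE s.id = ?
""", (1,)).fetchall()

for row in rows:
    print(row)
```

Note that joining reviews and cast members against the same episode multiplies rows (every review paired with every cast member), which is part of why pulling a whole show out this way gets expensive.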

We could also model this data as a set of nested hashes. The set of information about a particular TV show is one big nested key/value data structure. Inside a TV show, there’s an array of seasons, each of which is also a hash. Within each season, an array of episodes, each of which is a hash, and so on. This is how MongoDB models the data. Each TV show is a document that contains all the information we need for one show.

Here’s an example document for one TV show, Babylon 5.

It’s got some title metadata, and then it’s got an array of seasons. Each season is itself a hash with metadata and an array of episodes. In turn, each episode has some metadata and arrays for both reviews and cast members.
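As a rough sketch of that shape, here is the same structure as a Python dictionary, which maps directly onto JSON. The field names and the episode, review, and cast values are placeholders I made up; only the nesting follows the description above.

```python
import json

# One TV show as a single nested document (placeholder values).
show = {
    "title": "Babylon 5",
    "seasons": [
        {
            "number": 1,
            "episodes": [
                {
                    "title": "Example Episode",
                    "reviews": [{"rating": 5, "body": "Example review"}],
                    "cast_members": [{"name": "Example Actor"}],
                }
            ],
        }
    ],
}


def episode_titles(doc):
    """Everything is reachable by walking down from the root; no other lookups."""
    return [ep["title"] for season in doc["seasons"] for ep in season["episodes"]]


print(json.dumps(show, indent=2))
print(episode_titles(show))
```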

It’s basically a huge fractal data structure.

Sets of sets of sets of sets. Tasty fractals.

All of the data we need for a TV show is under one document, so it’s very fast to retrieve all this information at once, even if the document is very large. There’s a TV show here in the US called “General Hospital” that has aired over 12,000 episodes over the course of 50+ seasons. On my laptop, PostgreSQL takes about a minute to get denormalized data for 12,000 episodes, while retrieval of the equivalent document by ID in MongoDB takes a fraction of a second.

So in many ways, this application presented the ideal use case for a document store.

Ok. But what about social data?

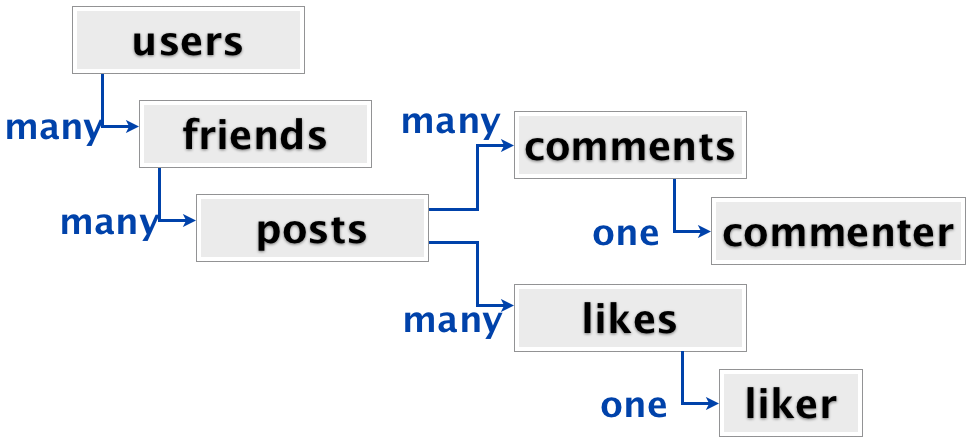

Right. When you come to a social networking site, there’s only one important part of the page: your activity stream. The activity stream query gets all of the posts from the people you follow, ordered by most recent. Each of those posts has nested information within it, such as photos, likes, reshares, and comments.

The nested structure of activity stream data looks very similar to what we were looking at with the TV shows.

Users have friends, friends have posts, posts have comments and likes, each comment has one commenter and each like has one liker. Relationship-wise, it’s not a whole lot more complicated than TV shows. And just like with TV shows, we want to pull all this data at once, right after the user logs in. Furthermore, in a relational store, with the data fully normalized, it would be a seven-table join to get everything out.

Seven-table joins. Ugh. Suddenly storing each user’s activity stream as one big denormalized nested data structure, rather than doing that join every time, seems pretty attractive.

In 2010, when the Diaspora team was making this decision, Etsy’s articles about using document stores were quite influential, although they’ve since publicly moved away from MongoDB for data storage. Likewise, at the time, Facebook’s Cassandra was also stirring up a lot of conversation about leaving relational databases. Diaspora chose MongoDB for their social data in this zeitgeist. It was not an unreasonable choice at the time, given the information they had.

What could possibly go wrong?

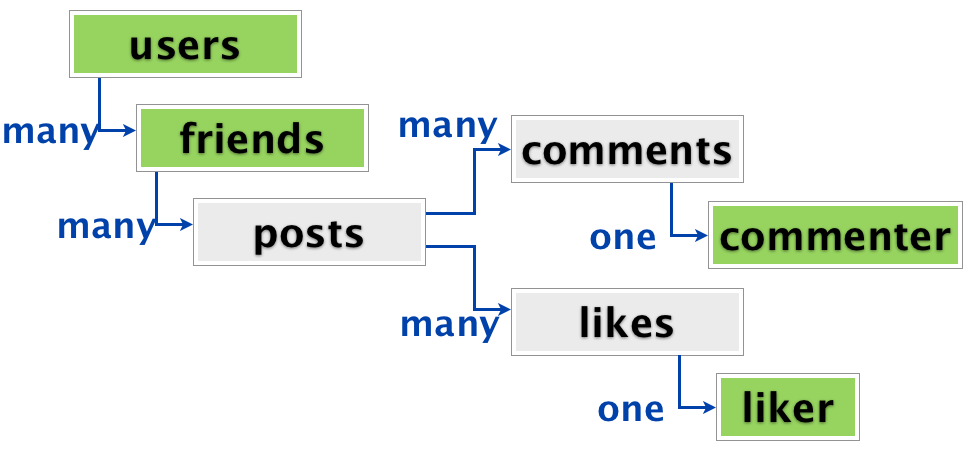

There is a really important difference between Diaspora’s social data and the Mongo-ideal TV show data that no one noticed at first.

With TV shows, each box in the relationship diagram is a different type. TV shows are different from seasons are different from episodes are different from reviews are different from cast members. None of them is even a sub-type of another type.

But with social data, some of the boxes in the relationship diagram are the same type. In fact, all of these green boxes are the same type — they are all Diaspora users.

A user has friends, and each friend may themselves be a user. Or, they may not, because it’s a distributed system. (That’s a whole layer of complexity that I’m just skipping for today.) In the same way, commenters and likers may also be users.

This type duplication makes it way harder to denormalize an activity stream into a single document. That’s because in different places in your document, you may be referring to the same concept — in this case, the same user. The user who liked that post in your activity stream may also be the user who commented on a different post.

Duplicate data Duplicate data

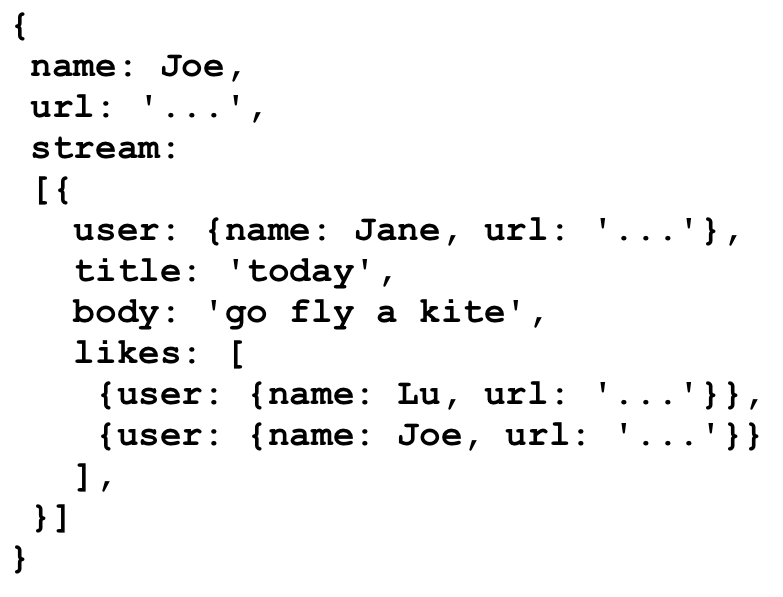

We can represent this in MongoDB in a couple of different ways. Duplication is an easy option. All the information for that friend is copied and saved to the like on the first post, and then a separate copy is saved to the comment on the second post. The advantage is that all the data is present everywhere you need it, and you can still pull the whole activity stream back as a single document.

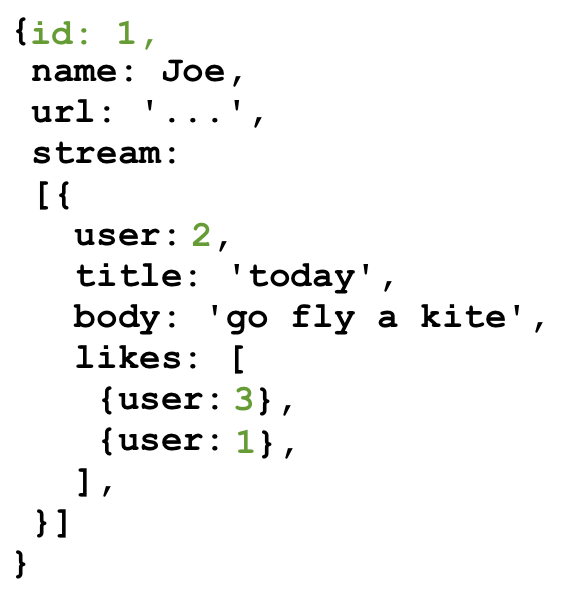

Here’s what this kind of fully denormalized stream document looks like.

Here we have copies of user data inlined. This is Joe’s stream, and it has a copy of his user data, including his name and URL, at the top level. His stream, just underneath, contains Jane’s post. Joe has liked Jane’s post, so under likes for Jane’s post, we have a separate copy of Joe’s data.

You can see why this is attractive: all the data you need is already located where you need it.

You can also see why this is dangerous. Updating a user’s data means walking through all the activity streams that they appear in to change the data in all those different places. This is very error-prone, and often leads to inconsistent data and mysterious errors, particularly when dealing with deletions.
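Here is a small sketch of that update problem, using a Python dictionary in place of a stored document. The field names, the `pod.example` URLs, and the `rename_user` helper are hypothetical; the shape follows the description of Joe's stream above.

```python
# Joe's stream, with user data inlined everywhere it is needed.
joe_stream = {
    "user": {"name": "Joe", "url": "https://pod.example/joe"},
    "stream": [
        {
            "author": {"name": "Jane", "url": "https://pod.example/jane"},
            "text": "Hello",
            "likes": [{"name": "Joe", "url": "https://pod.example/joe"}],
            "comments": [],
        }
    ],
}


def rename_user(stream_doc, url, new_name):
    """Rewrite every inlined copy of one user in ONE stream document.

    Returns how many copies were touched. A real rename would have to run
    this against every stream document the user appears in.
    """
    changed = 0

    def fix(user):
        nonlocal changed
        if user["url"] == url:
            user["name"] = new_name
            changed += 1

    fix(stream_doc["user"])
    for post in stream_doc["stream"]:
        fix(post["author"])
        for like in post["likes"]:
            fix(like)
        for comment in post["comments"]:
            fix(comment["author"])
    return changed


copies = rename_user(joe_stream, "https://pod.example/joe", "Joseph")
print(copies, joe_stream["stream"][0]["likes"][0]["name"])
```

Even in this tiny document the user appears twice. Forget one of the places in `rename_user`, or crash halfway through the list of streams, and the copies disagree with each other.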

Is there no hope?

There is another approach you can take to this problem in MongoDB, which will be more familiar if you have a relational background. Instead of duplicating user data, you can store references to users in the activity stream documents.

With this approach, instead of inlining this user data wherever you need it, you give each user an ID. Once users have IDs, we store the user’s ID every place that we were previously inlining data. New IDs are in green below.

MongoDB actually uses BSON ObjectIds, which are 12-byte values usually shown as hex strings, sort of like GUIDs, but to make these samples easier to read I’m just using integers.

This eliminates our duplication problem. When user data changes, there’s only one document that gets rewritten. However, we’ve created a new problem for ourselves. Because we’ve moved some data out of the activity streams, we can no longer construct an activity stream from a single document. This is less efficient and more complex. Constructing an activity stream now requires us to 1) retrieve the stream document, and then 2) retrieve all the user documents to fill in names and avatars.

What’s missing from MongoDB is a SQL-style join operation, which is the ability to write one query that mashes together the activity stream and all the users that the stream references. Because MongoDB doesn’t have this ability, you end up manually doing that mashup in your application code, instead.
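A sketch of what that manual mashup looks like, with plain Python dictionaries standing in for the two collections. The helper names (`find_stream`, `find_users`, `load_stream`) and field names are hypothetical, and no real database driver is involved; the `lookups` list just records each simulated round trip.

```python
# Plain dicts stand in for two collections; ids are integers for readability.
users = {
    1: {"name": "Joe", "url": "https://pod.example/joe"},
    2: {"name": "Jane", "url": "https://pod.example/jane"},
}
streams = {
    1: {
        "user_id": 1,
        "stream": [{"author_id": 2, "text": "Hello", "like_user_ids": [1]}],
    },
}

lookups = []  # records each simulated round trip to the data store


def find_stream(user_id):
    lookups.append("streams")
    return streams[user_id]


def find_users(user_ids):
    lookups.append("users")
    return {uid: users[uid] for uid in user_ids}


def load_stream(user_id):
    doc = find_stream(user_id)                  # 1) retrieve the stream document
    needed = {doc["user_id"]}
    for post in doc["stream"]:
        needed.add(post["author_id"])
        needed.update(post["like_user_ids"])
    people = find_users(needed)                 # 2) retrieve every referenced user
    return {                                    # 3) stitch them together by hand
        "user": people[doc["user_id"]],
        "stream": [
            {
                "author": people[post["author_id"]],
                "text": post["text"],
                "likes": [people[uid] for uid in post["like_user_ids"]],
            }
            for post in doc["stream"]
        ],
    }


joined = load_stream(1)
print(joined["stream"][0]["author"]["name"], lookups)
```

Everything in `load_stream` after the first lookup is work a relational database would do for you inside a single query.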

Simple Denormalized Data

Let’s return to TV shows for a second. The set of relationships for a TV show doesn’t have a lot of complexity. Because all the boxes in the relationship diagram are different entities, the entire query can be denormalized into one document with no duplication and no references. In this document database, there are no links between documents. It requires no joins.

On a social network, however, nothing is that self-contained. Any time you see something that looks like a name or a picture, you expect to be able to click on it and go see that user, their profile, and their posts. A TV show application doesn’t work that way. If you’re on season 1 episode 1 of Babylon 5, you don’t expect to be able to click through to season 1 episode 1 of General Hospital.

Don’t. Link. The. Documents.

Once we started doing ugly MongoDB joins manually in the Diaspora code, we knew it was the first sign of trouble. It was a sign that our data was actually relational, that there was value to that structure, and that we were going against the basic concept of a document data store.

Whether you’re duplicating critical data (ugh), or using references and doing joins in your application code (double ugh), when you have links between documents, you’ve outgrown MongoDB. When the MongoDB folks say “documents,” in many ways, they mean things you can print out on a piece of paper and hold. A document may have internal structure — headings and subheadings and paragraphs and footers — but it doesn’t link to other documents. It’s a self-contained piece of semi-structured data.

If your data looks like that, you’ve got documents. Congratulations! It’s a good use case for Mongo. But if there’s value in the links between documents, then you don’t actually have documents. MongoDB is not the right solution for you. It’s certainly not the right solution for social data, where links between documents are actually the most critical data in the system.

So social data isn’t document-oriented. Does that mean it’s actually…relational?

That Word Again

When people say “social data isn’t relational,” that’s not actually what they mean. They mean one of these two things:

1. “Conceptually, social data is more of a graph than a set of tables.”

This is absolutely true. But there are actually very few concepts in the world that are naturally modeled as normalized tables. We use that structure because it’s efficient, because it avoids duplication, and because when it does get slow, we know how to fix it.

2. “It’s faster to get all the data from a social query when it’s denormalized into a single document.”

This is also absolutely true. When your social data is in a relational store, you need a many-table join to extract the activity stream for a particular user, and that gets slow as your tables get bigger. However, we have a well-understood solution to this problem. It’s called caching.

At the All Your Base Conf in Oxford earlier this year, where I gave the talk version of this post, Neha Narula had a great talk about caching that I recommend you watch once it’s posted. In any case, caching in front of a normalized data store is a complex but well-understood problem. I’ve seen projects cache denormalized activity stream data into a document database like MongoDB, which makes retrieving that data much faster. The only problem they have then is cache invalidation.

“There are only two hard problems in computer science: cache invalidation and naming things.”

Phil Karlton

It turns out cache invalidation is actually pretty hard. Phil Karlton wrote most of SSL version 3, X11, and OpenGL, so he knows a thing or two about computer science.

Cache Invalidation As A Service

But what is cache invalidation, and why is it so hard?

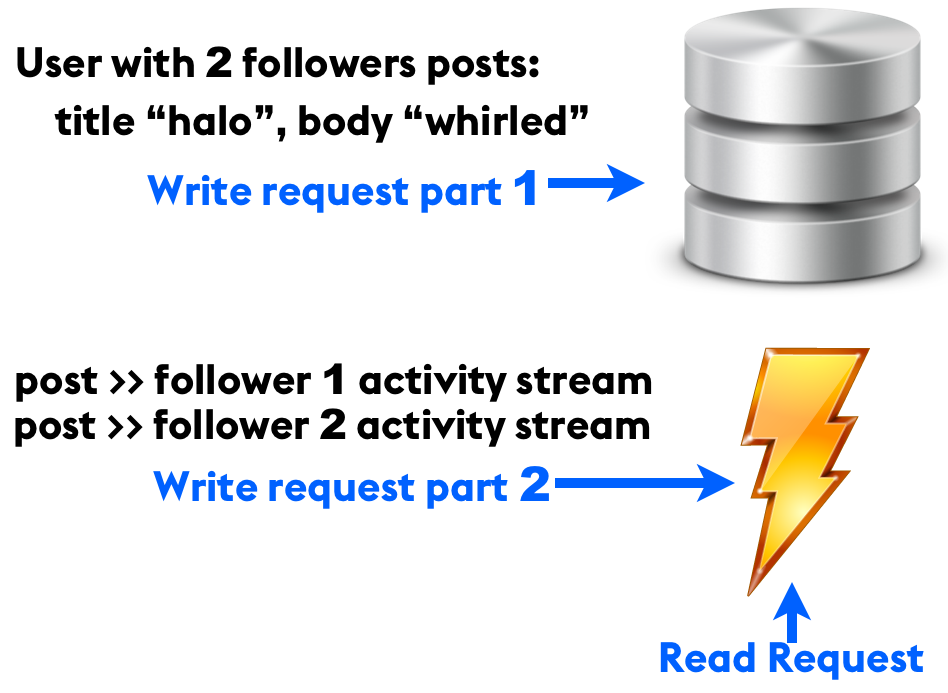

Cache invalidation is just knowing when a piece of your cached data is out of date, and needs to be updated or replaced. Here’s a typical example that I see every day in web applications. We have a backing store, typically PostgreSQL or MySQL, and then in front of that we have a caching layer, typically Memcached or Redis. Requests to read a user’s activity stream go to the cache rather than the database directly, which makes them very fast.

Typical cache and backing store setup

Application writes are more complicated. Let’s say a user with two followers writes a new post. The first thing that happens (step 1) is that the post data is copied into the backing store. Once that completes, a background job (step 2) appends that post to the cached activity stream of both of the users who follow the author.

This pattern is quite common. Twitter holds recently-active users’ activity streams in an in-memory cache, which they append to when someone they follow posts something. Even smaller applications that employ some kind of activity stream will typically end up here (see: seven-table join).

Back to our example. When the author changes an existing post, the update process is essentially the same as for a create, except instead of appending to the cache, it updates an item that’s already there.

What happens if that step 2 background job fails partway through? Machines get rebooted, network cables get unplugged, applications restart. Instability is the only constant in our line of work. When that happens, you’ll end up with invalid data in your cache. Some copies of the post will have the old title, and some copies will have the new title. That’s a hard problem, but with a cache, there’s always the nuclear option.

Always an option >_<

You can always delete the entire activity stream record out of your cache and regenerate it from your consistent backing store. It may be slow, but at least it’s possible.
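The whole sequence, including the failure and the nuclear option, can be sketched with three dictionaries. Everything here is a simplified stand-in I invented for illustration: `posts` plays the backing store, `cache` plays Memcached or Redis, and the `fail_after` parameter simulates the background job dying partway through.

```python
posts = {}                               # backing store: the source of truth
follows = {"alice": ["bob", "carol"]}    # author -> followers
cache = {}                               # follower -> cached activity stream


def write_post(post_id, author, title, fail_after=None):
    # Step 1: write to the consistent backing store.
    posts[post_id] = {"id": post_id, "author": author, "title": title}
    # Step 2: fan the post out to each follower's cached stream.
    for i, follower in enumerate(follows.get(author, [])):
        if fail_after is not None and i >= fail_after:
            raise RuntimeError("background job died partway through")
        stream = cache.setdefault(follower, [])
        for cached in stream:
            if cached["id"] == post_id:          # update an existing copy
                cached.update(posts[post_id])
                break
        else:
            stream.append(dict(posts[post_id]))  # or append a new one


def rebuild_stream(follower):
    """The nuclear option: throw the cached stream away and regenerate it."""
    authors = [a for a, fs in follows.items() if follower in fs]
    cache[follower] = [dict(p) for p in posts.values() if p["author"] in authors]
    return cache[follower]


write_post(1, "alice", "Old title")
try:
    write_post(1, "alice", "New title", fail_after=1)   # dies after updating bob
except RuntimeError:
    pass

stale_title = cache["carol"][0]["title"]   # carol still has the old copy
rebuild_stream("carol")                    # regenerate from the backing store
print(cache["bob"][0]["title"], stale_title, cache["carol"][0]["title"])
```

The rebuild only works because `posts` exists and is consistent. Delete that dictionary from the sketch and there is nothing left to rebuild from.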

What if there is no backing store? What if you skip step 1? What if the cache is all you have?

When MongoDB is all you have, it’s a cache with no backing store behind it. It will become inconsistent. Not eventually consistent — just plain, flat-out inconsistent, for all time. At that point, you have no options. Not even a nuclear one. You have no way to regenerate the data in a consistent state.

When Diaspora decided to store social data in MongoDB, we were conflating a database with a cache. Databases and caches are very different things. They have very different ideas about permanence, transience, duplication, references, data integrity, and speed.

The Conversion

Once we figured out that we had accidentally chosen a cache for our database, what did we do about it?

Well, that’s the million-dollar question. But I’ve already answered the billion-dollar question. In this post I’ve talked about how we used MongoDB vs. how it was designed to be used. I’ve talked about it as though all that information were obvious, and the Diaspora team just failed to research adequately before choosing.

But this stuff wasn’t obvious at all. The MongoDB docs tell you what it’s good at, without emphasizing what it’s not good at. That’s natural. All projects do that. But as a result, it took us about six months, a lot of user complaints, and a lot of investigation to figure out that we were using MongoDB the wrong way.

There was nothing to do but take the data out of MongoDB and move it to a relational store, dealing as best we could with the inconsistent data we uncovered along the way. The data conversion itself — export from MongoDB, import to MySQL — was straightforward. For the mechanical details, you can see my slides from All Your Base Conf 2013.

The Damage

We had eight months of production data, which turned into about 1.2 million rows in MySQL. We spent four pair-weeks developing the code for the conversion, and when we pulled the trigger, the main site had about two hours of downtime. That was more than acceptable for a project that was in pre-alpha. We could have reduced that downtime more, but we had budgeted for eight hours of downtime, so two actually seemed fantastic.

NOT BAD

Epilogue

Remember that TV show application? It was the perfect use case for MongoDB. Each show was one document, perfectly self-contained. No references to anything, no duplication, and no way for the data to become inconsistent.

About three months into development, it was still humming along nicely on MongoDB. One Monday, at the weekly planning meeting, the client told us about a new feature that one of their investors wanted: when they were looking at the actors in an episode of a show, they wanted to be able to click on an actor’s name and see that person’s entire television career. They wanted a chronological listing of all of the episodes of all the different shows that actor had ever been in.

We stored each show as a document in MongoDB containing all of its nested information, including cast members. If the same actor appeared in two different episodes, even of the same show, their information was stored in both places. We had no way to tell, aside from comparing the names, whether they were the same person. So to implement this feature, we needed to search through every document to find and de-duplicate instances of the actor that the user clicked on. Ugh. At a minimum, we needed to de-dup them once, and then maintain an external index of actor information, which would have the same invalidation issues as any other cache.
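To show the difference in effort, here is a sketch of both approaches side by side. The show documents, names, dates, and table layout are all invented for illustration, and SQLite (via Python's built-in `sqlite3`) stands in for a relational store; this is not the client's actual schema.

```python
import sqlite3

# Document version: cast members are inlined in every episode (placeholder data).
shows = [
    {"title": "Show A", "seasons": [{"number": 1, "episodes": [
        {"title": "Pilot", "aired": "2001-01-01",
         "cast_members": [{"name": "Pat Example"}]}]}]},
    {"title": "Show B", "seasons": [{"number": 2, "episodes": [
        {"title": "Finale", "aired": "1999-05-05",
         "cast_members": [{"name": "Pat Example"}, {"name": "Sam Sample"}]}]}]},
]


def career_by_scanning(name):
    """Walk every document and match on name, the only identifier we have."""
    hits = []
    for show in shows:
        for season in show["seasons"]:
            for ep in season["episodes"]:
                if any(c["name"] == name for c in ep["cast_members"]):
                    hits.append((ep["aired"], show["title"], ep["title"]))
    return sorted(hits)


# Relational version: one row per actor, linked to episodes through a join table.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE actors      (id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE episodes    (id INTEGER PRIMARY KEY, show_title TEXT,
                          title TEXT, aired TEXT);
CREATE TABLE appearances (actor_id INTEGER REFERENCES actors(id),
                          episode_id INTEGER REFERENCES episodes(id));
INSERT INTO actors      VALUES (1, 'Pat Example'), (2, 'Sam Sample');
INSERT INTO episodes    VALUES (1, 'Show A', 'Pilot', '2001-01-01'),
                               (2, 'Show B', 'Finale', '1999-05-05');
INSERT INTO appearances VALUES (1, 1), (1, 2), (2, 2);
""")


def career_by_join(actor_id):
    return conn.execute("""
        SELECT e.aired, e.show_title, e.title
        FROM appearances a
        JOIN episodes e ON e.id = a.episode_id
        WHERE a.actor_id = ?
        ORDER BY e.aired
    """, (actor_id,)).fetchall()


print(career_by_scanning("Pat Example"))
print(career_by_join(1))
```

Both functions return the same listing here, but the scan touches every document and treats two people with the same name as one actor. The join looks the actor up by ID and can use an index.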

You See Where This Is Going

The client expected this feature to be trivial. If the data had been in a relational store, it would have been. As it was, we first tried to convince the PM they didn’t need it. After that failed, we offered some cheaper alternatives, such as linking to an IMDB search for the actor’s name. The company made money from advertising, though, so they wanted users to stay on their site rather than going off to IMDB.

This feature request eventually prompted the project’s conversion to PostgreSQL. After a lot more conversation with the client, we realized that the business saw lots of value in linking TV shows together. They envisioned seeing other shows a particular director had been involved with, and episodes of other shows that were released the same week this one was, among other things.

This was ultimately a communication problem rather than a technical problem. If these conversations had happened sooner, if we had taken the time to really understand how the client saw the data and what they wanted to do with it, we probably would have done the conversion earlier, when there was less data, and it was easier.

Always Be Learning

I learned something from that experience: MongoDB’s ideal use case is even narrower than our television data. The only thing it’s good at is storing arbitrary pieces of JSON. “Arbitrary,” in this context, means that you don’t care at all what’s inside that JSON. You don’t even look. There is no schema, not even an implicit schema, as there was in our TV show data. Each document is just a blob whose interior you make absolutely no assumptions about.

At RubyConf this weekend, I ran into Conrad Irwin, who suggested this use case. He’s used MongoDB to store arbitrary bits of JSON that come from customers through an API. That’s reasonable. The CAP theorem doesn’t matter when your data is meaningless. But in interesting applications, your data isn’t meaningless.

I’ve heard many people talk about dropping MongoDB into their web application as a replacement for MySQL or PostgreSQL. There are no circumstances under which that is a good idea. Schema flexibility sounds like a great idea, but the only time it’s actually useful is when the structure of your data has no value. If you have an implicit schema, meaning there are things you are expecting in that JSON, then MongoDB is the wrong choice. I suggest taking a look at PostgreSQL’s hstore (now apparently faster than MongoDB anyway), and learning how to make schema changes. They really aren’t that hard, even in large tables.

Find The Value

When you’re picking a data store, the most important thing to understand is where in your data — and where in its connections — the business value lies. If you don’t know yet, which is perfectly reasonable, then choose something that won’t paint you into a corner. Pushing arbitrary JSON into your database sounds flexible, but true flexibility is easily adding the features your business needs.

Make the valuable things easy.

The End.

Thanks for reading! Let me sum up how I feel about comments on this post:

Came here from HN. Great article! Really well-written, great examples, and easy to follow. You’ve convinced me to stay relational!

Really interesting read, made all the more enjoyable by references to Babylon 5! Thanks!

This article was a great read. I’ve been contemplating using MongoDB for a nodejs project, but you’ve put the nail in the coffin to that idea. Thank you. You’ve saved me countless hours of work.

Thanks! This is an excellent treatment of the ills of document stores.

It is nigh-impossible to beat the performance of postgres and oracle for any use-case beyond trivial and I think you definitely call out all the major points.

I’ve recently had some experience consulting with a team that doesn’t have much data (just a few hundred million rows) but they’ve exceeded the memory footprint of the machine several times. How do you guys deal with the size of your data?

The real challenge I see here is in sharding “social-relational” data that is fundamentally related in too many ways (forcing you to build giant indices, forcing you to buy more memory). Have you guys encountered this problem?

Thanks for writing this article. I found the first half to be very well researched, clever, and fun to read (as well as educational and entertaining!). The only part of the article that irked me was towards the latter half, when you suddenly make the claim that MongoDB is a cache, as a result of which, horrible things happened. I’ve read a bit about Mongo and agree completely, but if I didn’t have that context, I might have been a little confused, since previously Mongo was presented as an “Actual Real Database™” one might use in production.

Anyway, I’m really glad you spent the time gathering these thoughts and sharing them. Reading this has helped me think about how I approach storing data for a project — the goal shouldn’t be to solve the current problem and feel clever, but rather to understand how the project will need to use that data in the long term.

Good discussion of some of the issues with Mongo and relational. Wondering why all the comments are on ycombinator and not here

https://news.ycombinator.com/item?id=6712703

I did a somewhat related post on how one would design a schema for facebook type functionality

http://www.kylehailey.com/facebook-schema-and-peformance/

“Some folks say graph databases are more natural, but I’m not going to cover those here, since graph databases are too niche to be put into production.”

Are you sure? Have you told the BBC?

http://www.bbc.co.uk/blogs/internet/posts/Linked-Data-Connecting-together-the-BBCs-Online-Content

[WARNING: WAAAY OFF TOPIC]

Heya Sarah,

Just dropping by to say hi, and that you’re still missed over on D*. This isn’t any kind of attempt to convince you to do or change anything. Just to let you know you came up briefly in conversation, and I fondly remembered your posts. Hope you and the (probably no longer) little ‘uns are doing well. Thanks for giving me the invite to D* all those years ago.

Bugs

We chose MongoDB since it was the most popular (at least it seemed to be), and it had an aggregation framework. After 6 months into our social data application, we saw the pains of duplication. We had workers take care of most of these problems, but it was still too error prone and became very annoying when we needed to develop new features that had some kind of relation involved.

In hindsight, I think we should have used a relational database, and I still don’t see the benefits of using Mongo in our application today. Like this post says, Mongo has a very narrow use case and is ideal when you just want to dump stuff into it. It should really be thought of as a document folder on your operating system where there are all these different documents that you just dump in there and each file has no relation to each other, but it is easy to retrieve when you need it.

The fundamental failure here was not the database, but the perception that social data must be consistent. If a user changes his/her name, does it matter if others see this update right away or 1 minute/5 minutes/1 hour later?

How about if you stored all updates as activity stream (e.g. user Joe registered, user Joe posted a comment, etc) and built up other (fully denormalized) documents asynchronously? How about you made use of map reduce to resolve the userId links? Note that these solutions do not go against the document database way of doing things.

You’re stuck in relational mindset where things are fully consistent and try to apply this for document databases. Yes, it will fail. But document databases do not have anything to do with that.

The impression I get is that while there are quite a few use-cases where relational databases are not ideal, there are very few use-cases where they are less than adequate, and the alternatives tend to have a much narrower range of use-cases and much worse problems when used inappropriately

Very nice article. I am just confused about one thing though. In the TV example, isn’t there data duplication for cast_members, if the cast members are more than mere names?

Thank you Sarah for this detailed write up!

Let this be a painful reminder to other developers who may have bought into the hype of certain new technologies.

I hope for their sake they don’t have to learn the hard way.

Well, back in the old days, all kinds of databases were document oriented. And with good reason people had the idea to create relational databases, because they are helpful in 97% of all projects. But I remember the time in 2010 when everyone was in love with MongoDB and CouchDB. Maybe in some time network databases will be the next big thing, and right after that there will be a time again for document-based databases, and so on and so on. You should always be sceptical when someone says “well, just take technique xyz and all works perfectly”.

But nice article anyway! Thanks.

Nice post, thank you!

Have you ever heard about ?.

With events even if you came to inconsistent state you can fix a problem, replay events and become consistent again.

I’ve seen many MongoDB applications run into similar problems because they did a lot of denormalization and the data became inconsistent. That’s the problem.

But if you have a “single source of truth” it can be relational database or events store with mongodb on top of it — you are just fine.
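To make the replay idea concrete, here is a minimal sketch in Python. It assumes the event log is the single source of truth and that the denormalized view can always be thrown away and rebuilt. The `Event` class, the event kinds, and `replay` are hypothetical names invented for this illustration, not part of any event store's API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    kind: str      # "user_registered", "user_renamed", or "comment_posted"
    user_id: int
    payload: str   # the name for user events, the text for comment events


def replay(events):
    """Rebuild the denormalized read model from scratch by folding over the log."""
    names = {}      # user_id -> latest display name
    comments = []   # (user_id, text) in posting order
    for event in events:
        if event.kind in ("user_registered", "user_renamed"):
            names[event.user_id] = event.payload
        elif event.kind == "comment_posted":
            comments.append((event.user_id, event.payload))
    # Names are resolved only at the end, so every comment shows the latest name.
    return [{"author": names[uid], "text": text} for uid, text in comments]


log = [
    Event("user_registered", 1, "Joe"),
    Event("comment_posted", 1, "First!"),
    Event("user_renamed", 1, "Joseph"),
]
view = replay(log)
```

If a cached copy of `view` ever drifts out of sync, discarding it and calling `replay(log)` again restores a consistent state, which is the recovery path described above.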

I’m curious why you say that graph databases are not ready for production. Neo4J seems to be doing fine.

If you could elaborate on the “since graph databases are too niche to be put into production” argument, I’d be happy.

Reading this article I was constantly reminded of this video,

Video Link

At the same time I was reminded of something a previous boss taught me. “New technology doesn’t solve all problems. It merely changes what problems you have to solve.”

Isn’t the title here a bit misleading and should rather be “Why you should not use the current buzzword for your next product, before you actually know your technical requirements”?

I have used MongoDB in a few different production applications for years, and never had any of the trouble that is so often written about. And I have terabytes of data flying through it every few months.

As the author stated herself, it’s a question of your actual data matching what MongoDB does; for relational data it quickly does not. The problem is that it seems to support relational data just fine when you start out a project. Doing manual joins for a handful of collections with little data is easily done in Ruby.

But then suddenly the product you are building has a little bit of success and the bad database design comes flying around your ears, just as it did not so long ago when someone was abusing MySQL and co.

The biggest curse of MongoDB is that it seems just too good to be true at the beginning and unfortunately leads to making age-old mistakes in development again, because the fresh devs don’t know any better and assume it’s all taken care of by the cool new thing they read about in some blogs recently.

This is one of the best write-ups on the disadvantages of MongoDB. Great usage of examples with the TV Shows vs. Social data models. Thanks for putting this together.

I think the reason why there is so much confusion with MongoDB is the fact there isn’t a set of great use cases for its use. It should never be used as a replacement for a SQL engine, I’ve seen people try and this will almost always lead to failure. It’s a totally different model.

I think that, as Ayende Rahien (the creator of RavenDB) pointed out in a blog post, you just misunderstood the right way to use MongoDB, or any document-oriented DB, for that matter.

Of course, if you design your model to have ALL the related data included within a document, you’re going to hold all the data on earth (or at least in your db) inside of one single document!

I know that in a document db world, we try to have the maximum of all the data related to one concept (or area, view, etc.) in a document, to be able to make very few requests to display what you need in your view (as in the tv show example 1st version).

But you CAN have references in a document to others documents of the same nature (so you don’t duplicate your data), and use includes when you query your datastore.

Another solution maybe smarter, would be to have partial data for your referenced documents, with an id allowing you to load the details if needed:

{
  id: 'users/1',
  name: 'Jack',
  url: '…',
  /* a lot of other user related data */
  stream: [{
    /* here we don't need to duplicate all the details data, just what we need to display when another user posts smth on your wall */
    user: { id: 'users/2', name: 'Kate', url: '…' },
    title: '…',
    body: '…',
    likes: [
      /* here we don't need to duplicate all the details data, just what we need to display when another user likes smth on your wall */
      { id: 'users/3', name: 'John', url: '…' },
      /* Here you liked the post yourself, so we already have all the info about yourself, we don't need to duplicate anything! */
      { id: 'users/1' },
      …
    ]
  }]
}

Now, if this partial data needs to be updated whenever the referenced document changes, your second guess, using only ids to reference other documents, would be the best, and you would still get very fast query times if you design map/reduce indexes efficiently. You would be relational just for this exact problem (user referencing users referencing users refe…) in a whole NoSQL design.
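The trade-off between embedding a partial copy and following the id can be sketched in a few lines of Python. This is only an illustration using in-memory dicts as a stand-in for a document store; `snapshot` and `load_details` are hypothetical helper names, and the URLs and bios are made-up placeholder values.

```python
# Canonical user documents, keyed by id (a stand-in for a users collection).
users = {
    "users/1": {"id": "users/1", "name": "Jack", "url": "/u/jack", "bio": "..."},
    "users/2": {"id": "users/2", "name": "Kate", "url": "/u/kate", "bio": "..."},
}


def snapshot(user_id):
    """Return only the fields needed to render a user next to a post or like."""
    user = users[user_id]
    return {"id": user["id"], "name": user["name"], "url": user["url"]}


def load_details(reference):
    """Follow the embedded id back to the full, canonical user document."""
    return users[reference["id"]]


post = {"user": snapshot("users/2"), "title": "Hello", "likes": [snapshot("users/1")]}

# Rendering the wall needs no extra lookup...
author_name = post["user"]["name"]

# ...but after a rename the embedded copy is stale until something refreshes it.
users["users/2"]["name"] = "Katherine"
stale_name = post["user"]["name"]
fresh_name = load_details(post["user"])["name"]
```

The embedded snapshot keeps reads to a single document, while the id is what lets the application notice and repair the stale copy.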

I think that, as Bredon thinks, your post is clearly provoking and anyone with a successful experience with a document oriented datastore would answer you that you criticize something that you were just not able to use properly.

This, unfortunately, looks like a classic case of “if you have a hammer, you treat everything like a nail.” You are clearly very familiar with relational databases, and relational querying, but have less experience with map-reduce operations, document-based indexing, graph databases and related techniques. The example you give as “insurmountable” in mongodb is a pretty straightforward map-reduce operation.

You also have to think about different consistency schemes – away from the traditional “transactionally consistent” approach of a db – which has all sorts of scaling issues – and towards an “eventually consistent” approach.

MongoDB is not a drop-in replacement for a relational database, nor would a graph database like Neo4J be. But they are both excellent solutions to particular types of data shaping, reading and writing problem.

You should use a graph database instead of Mongo, since links change fast and often.

So A likes B is a link, and B comments on C is a link.

A social network is full of links.

For example, Neo4j, Titan (Cassandra backend), or others.

Graph databases may not have been well known or mature at the time Diaspora started.

Another way is to build a graph interface yourself.

And you can choose Riak, Cassandra, or others for high availability, and Redis for fast analysis.

I could not see why you state this based on your example above. MongoDB is made for storing and querying semi-structured data, like documents that have only some fields common, and that’s what it’s good for. In that case the data is not without value, it’s a logical piece of data that simply has a variable schema and you need to design your application around that fact.

Clearly non-relational DB’s have problems when your data is in fact relational in some level. Like in the example, schemaless structure gets complicated and leads to design problems when you need to reference other documents. Then your data seems relational, and you probably need RDBMS.

One thing I’ve learned: the data will outlive the application. That’s the main reason why you want it to be organized independently of how it happens to be useful for the first application. In other words, you want it normalized (as you discovered) because at some point someone will want to query /update it in a totally different way than you’ve organized it initially.

Relational databases were invented specifically to fix the problem caused by the previously-existing hierarchical databases; I don’t know why people think going back to those is in any way an improvement.

Great piece. Very clear on the most fundamental problem with MongoDB. (There are other problems with it that I know only by reputation, … so I guess there’s no need to go into them.) Sadly, a lot of people will hear “MongoDB is a bad key-value store”, not “key-value stores in place of databases are bad”. And of course, a lot of people will simply refuse to hear anything at all. But, kudos for writing this.

Nice explanation. I am going to admit I have faced the same problem with Redis. I’ve built a data structure and created “fake” FKs between the models, but it is not flexible at all and I’m having loads of issues modifying it. It was really fun to use Redis, but now, looking at the issues I am facing, I know I screwed up. Although I really believe that for live counters, score boards etc it’s just perfect. But not to store normalized data.

Seems to me that a mixed approach might be helpful. A document store database would be good at storing documents, both the contents and attributes. Then use a relational database to store and manipulate the relationships.

I know that a lot of folks just choked. Actually using two different technologies in the same project just sends some into freakdom. Still using something the way that it was intended and for what it was intended seems natural to me.

I hope you don’t change your mind when you reach billions of records.

There are too many flaws in your reasoning.

The real title should have been “Why I was unable to use a document store” (I understand bashing MongoDB helps you get more clicks).

Your “all you have with mongodb is a cache” argument is false. “write request #1” = data, “write request #2” = cache.

You can always rebuild activity streams with post data, whatever system you use for storing posts (MongoDB, MySQL, …), and activity stream (MongoDB, MySQL, Redis, …).

The only drawback of most NoSQL databases is the lack of transactions. Some apps have a strong need for transactions (=> not a good idea to use NoSQL), some don’t (=> NoSQL might be usable).

I really think the TV show app belongs to the “transactions are not a requirement” category. All you need is to split your documents (show actor).

“Why You Should Never Say Never”:

Embrace the redundancy and build your application accordingly. Once you rely on joins you have a serious scalability issue, especially on projects that aspire to have a huge userbase. Accept eventual consistency as sufficient. The second your neatly joined, consistent data comes back it’s stale anyway.

Who cares if a user who just changed his name appears with his old name for a few more seconds on some other part of the site while the update job enters a queue and will eventually straighten things out.

RDBMS make writing consistent data their top priority and sacrifice read performance to do that. Still 90% of the time you wanna just read from your database, so that should be your main concern, if you can guarantee that the data will be eventually consistent. Once you can do that, NoSQL like MongoDB starts looking pretty good again.
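The queue-based update described above can be sketched in Python as follows. This is a toy, single-process illustration: a `deque` stands in for a real job queue, and `rename` and `run_pending_jobs` are hypothetical names. It shows the window during which denormalized copies still carry the old name.

```python
from collections import deque

profile = {"id": 7, "name": "Joe"}

# Denormalized copies of the name, embedded where posts are rendered.
posts = [
    {"author_id": 7, "author_name": "Joe"},
    {"author_id": 7, "author_name": "Joe"},
]
jobs = deque()


def rename(new_name):
    """Write the source of truth now; defer fixing the embedded copies."""
    profile["name"] = new_name
    jobs.append(("sync_author_name", profile["id"], new_name))


def run_pending_jobs():
    """Background worker: drain the queue and refresh stale copies."""
    while jobs:
        _, author_id, name = jobs.popleft()
        for post in posts:
            if post["author_id"] == author_id:
                post["author_name"] = name


rename("Joseph")
names_before = [p["author_name"] for p in posts]  # still the old name
run_pending_jobs()
names_after = [p["author_name"] for p in posts]   # copies have converged
```

Whether that window is acceptable depends on the application; the sketch also makes visible that every denormalized location has to be known to the worker, or it never converges.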

Amazing post.

Had I read this 2 years ago, I would have thought twice before writing a huge Facebook game with lots of social layers and join-oriented data analysis in Mongo. We chose this product only because it was cool at the time, and our PM thought it was a good call because it enabled us to cut pre-alpha development time in half (and show results to our investors). Little did we know that half a year later we’d face terrible performance issues because we had to select ALL our information for basic operations, and face terrible persistence bugs originating from inconsistent duplicate information. When we tried to add a cache to our code, it all went to hell. The game, and my job, were done for.

Well, MongoDB has more issues than just a simple use case mismatch like you explained. Real world data storage problems are 99% relational, the question is, could you make that appear as non-relational, or have absolutely minimal references between types. If so, MongoDB would be still viable, if it was a real distributed system and not like a simple master-slave replication solution what you can get for MySQL too.

Excellent, thought-provoking article. Thank you for sharing this valuable experience with the community. I’m a little surprised by your swift dismissal of graph DBs especially for a social networking site. I know they are not widely adopted, but I would have thought it’s such a natural fit that it would have received more consideration. I believe they have the potential to become the answer to the scaling issues with relational stores without forfeiting normalization. I have only tinkered with them thus far, so perhaps there are some fundamental trade-offs with that approach which I don’t understand.

This is false. There is a particular format and schema, it is JSON. You cannot insert invalid JSON. You cannot insert BSON. You cannot insert XML, and so on and so forth. NoSql JSON databases have schema. It is just a very loose / nonrigid schema which is highly beneficial.

This is patently false. There is always schema. The schema is the data itself. There is always the idiom garbage in garbage out. If you have nothing at all to have any kind of schema and just allow random json in, yes you have garbage in garbage out. But let’s step back, for the most part you’re going to be doing this with some kind of programming language even if it’s javascript. You will have types/classes that define schema.

Having a loose schema database is absolutely fantastic for applications. You want to store more fields, new kinds of data you just enrich your models and then cope with previous data that does not have the new points. You could either not care, or you could update existing documents to have something.

The only downside to a loose schema database is you can inadvertently lose data. The easiest scenario is renaming a field. You change Foo to Bar, now your entire database will have nothing in Bar, and the data Foo contains will slowly but surely be eaten away. You can work around this by updating all your documents to be consistent with your new application schema.
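The rename scenario and its workaround can be sketched in Python with plain dicts standing in for stored documents. `rename_field` is a hypothetical helper written for this illustration, not a driver call; a real store would typically offer its own bulk update for this.

```python
def rename_field(documents, old, new):
    """Move `old` to `new` on every document that still uses the old name."""
    migrated = 0
    for doc in documents:
        if old in doc and new not in doc:
            doc[new] = doc.pop(old)
            migrated += 1
    return migrated


docs = [
    {"_id": 1, "Foo": "a"},
    {"_id": 2, "Bar": "b"},   # already written by the new application code
    {"_id": 3, "Foo": "c"},
]

# Before the migration, code that reads only "Bar" silently misses two documents.
missing_before = sum(1 for d in docs if "Bar" not in d)
migrated = rename_field(docs, "Foo", "Bar")
missing_after = sum(1 for d in docs if "Bar" not in d)
```

The guard `new not in doc` keeps the migration safe to run more than once and avoids overwriting documents the new code has already written.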

I don’t use MongoDB so I can’t get into your application design issues you encountered, I use RavenDB. The issues you encountered that made you leave MongoDB, could have been solved with the features RavenDB provides. RavenDB does allow you to join data.

Creating relationships in your software is one of the furthest reaching design decisions you make. This is why I loathe RDBMS: it makes the assumption that everything is a relationship, which is wrong. RavenDB lets you choose very carefully. To answer some of your problems, I would denormalize copy userIds and probably postIds. These Ids would be immutable. Then use indexes to create the time lines to bridge the live data together. Or possibly even avoid joining in the index, and rely on features of Raven that allow you to bring back documents at read time. Maybe I don’t really need Posts inside my index, but merely when I select the top 50 items in my feed I need to show the content of those posts.

They say in psychology that one choice is a sign of psychosis, and having only two, a sign of neurosis. In both of these scenarios, the issue appears to be not that the data is relational (implying a functional relationship between co-variants) or document (as you rightly cite, being self-contained, non-hyperlinked, non-relational, unique unto themselves, which is to say virtual unicorns, mythical creatures in the modern world 😉

so what about structured data? how are we to represent structured “graph-like” data without dangerous replications?

what about object databases? existdb for example.

Incredible post. This referential data problem in MongoDB always confounded me and I wondered how these types of situations were handled (or not). This bothered me to the point that I almost chased down someone with a MongoDB backpack on in the airport to ask them :). I guess the paradigm is fast reads, slower writes. You mention graph databases briefly but I feel like if they have a place in any application, this would be it. When you say that graph databases are “too niche” are you saying that they are “too niche for Diaspora” or just for general use? I only have experience with one (Neo4J) and I’m certainly not an authority but it was extremely powerful and easy to use.

It is amazing how many people seem to be able to understand the power of MongoDB, and successfully use it in large scale production environments….

http://www.mongodb.org/about/production-deployments/

http://engineering.foursquare.com/2012/11/27/mongodb-at-foursquare-from-the-the-cloud-to-bare-metal/

(reasonable sized social media site!)

The real issue is the people here didn’t learn HOW TO USE MongoDB at the start of their project.

I would not have recommended MySQL as an alternative in this case; instead I would have preferred to look at a graph database option (personal opinion and technical advice, for that matter).

If you are reading this article looking for advice about “NoSQL” technologies, I recommend you watch this great presentation by Martin Fowler: https://www.youtube.com/watch?feature=player_embedded&v=qI_g07C_Q5I

Hey folks, thanks for the feedback. I won’t be approving any more comments that can be summed up as “you are sooooo dumb. fer reals.” In fact I may go delete some of the ones I did approve. :p I don’t think they add much to the conversation.

Re: graph databases, when I say they’re too niche, what I mean is that they’re too expensive, and/or they’re too difficult to deploy without a DBA or dedicated ops person. Big companies have both lots of money and lots of people, so of course they can do it, but big companies are boring. 😉 Graph databases just aren’t practical for startups and small companies doing their own ops.

Also any comment containing “No offense, but…” will be summarily deleted.

They don’t really care about duplication of data; disk space is cheap compared to the processor power and memory that an RDBMS needs. NoSQL is about fast database access, not about ACID.

We have a large betting system running on an Oracle box; there are 16 (Itanium, circa 2003) processors grinding away with an Oracle instance. The weak point is the CPU time.

This is a good article if you read between the lines and ignore the title “Why you should never use MongoDB”. This serves as a good lessons-learned article on document design. Document design is a completely different skill compared to relational schema design. Ask 10 relational DBAs to give you a schema design for an online book shop and they will all give you pretty much the same thing; ask 10 NoSQL programmers to design a schema and each will come back completely different. Good document design depends on the actual usage of that data rather than just the storage. It’s also much newer; people aren’t as experienced, and there aren’t as many articles detailing how to do it well or describing common patterns. In both examples used here I think poor document schemas were used in the very beginning. I understand de-normalising and redundancy in document stores, but really? To this level? Actors continually duplicated like that, especially when pages about actors seem a VERY logical progression and actor data is likely to change. What did you plan on doing if someone got married?

Storing relational data in a document store is not a bad thing. One collection for TV shows, one collection for actors. Easy. IDs can be stored to relate them; heck, MongoDB has conventions for this and some drivers offer support. http://docs.mongodb.org/manual/reference/database-references/ Document stores are designed to be incredibly quick, designed in such a way that where you would make one query in a relational database you now make many to retrieve many documents. It requires more effort at the application layer, but couple any relational database with an ORM and you’ve got that anyway. This isn’t a bad thing, it’s just different.
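A minimal Python sketch of that two-collection layout, with dicts standing in for the collections. The show titles, actor names, ids, and the `show_with_cast` helper are all invented for this illustration; a real driver would issue one query for the show and one or more for the referenced actors.

```python
# Two "collections": shows hold only actor ids, actors hold the actor data.
shows = {
    "show-1": {"title": "Example Show", "cast_ids": ["actor-1", "actor-2"]},
    "show-2": {"title": "Another Show", "cast_ids": ["actor-2"]},
}
actors = {
    "actor-1": {"name": "Alex"},
    "actor-2": {"name": "Sam"},
}


def show_with_cast(show_id):
    """Two-step read: fetch the show, then fetch each referenced actor."""
    show = shows[show_id]
    cast = [actors[actor_id]["name"] for actor_id in show["cast_ids"]]
    return {"title": show["title"], "cast": cast}


# An actor's name lives in one place, so a rename is a single write.
actors["actor-2"]["name"] = "Samantha"
page_one = show_with_cast("show-1")
page_two = show_with_cast("show-2")
```

The join has moved into application code: reads cost extra lookups, but the rename shows up on every show page without touching any show document.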

This article also completely fails to mention exactly what was going on at the application tier. On retrieving the JSON document, was each one of your ‘boxes’ in the diagram mapped to a Ruby class? If this is the case then the application effectively has a strongly relational model at the application tier and is shoe-horning JSON into it. This is NEVER going to work. You basically designed a fully relational database but are storing it as JSON. It’s just that the location of the schema is application level rather than database level.

A final thing that I would like to note (and I’m sorry if you’re friends of the guys that wrote the software): they didn’t seem to be the greatest developers when they started. A quick Google search for “Diaspora security flaws” returns many results; this one in particular is excellent: http://www.kalzumeus.com/2010/09/22/security-lessons-learned-from-the-diaspora-launch/. Some of the things listed here are really very basic security 101 things, and the fact that they existed gives me no confidence that the developers had the experience to make good decisions early on about technology. I will note, however, that things seem to have moved on a long way since then and the security issues are now not a problem.

It’s an interesting article and whilst not all the comments here are friendly I think if you interpret the words in a different way than the title suggests then it’s very useful.

Good post, but as some have asked already, was the person good at designing the DB? About RDBMS, how come OLAP and OLTP are two separate systems when there should not be any difference? With social networks that are combined into businesses as they analyse consumers’ behaviour, it turns into billions of records to manipulate, and an RDBMS can’t handle that properly. Any thoughts about in-memory database systems (IMDBS) like HANA?

Thanks for the post!

I know you addressed this, but still, this looks like a job for… A graph database!

The data you’re working with just fits the graph model so perfectly. I admit there’s not a lot of production ready software out there, but it may be/have been worthwhile investigating something from the RDF ecosystem. Virtuoso and some similar triple stores are production ready (or close) today.

Very interesting piece.

It would be extremely beneficial to have a reply from MongoDB people (as in MongoDB Inc), to argument and maybe suggest an implementation or set of best practices in designing a system to support the case presented here.

I second Mike Leonard on the issue that documents should be designed properly for your use case.

Adding 2 cents, I learned a lot when I heard martin fowler speaking of NoSQL databases as Aggregate oriented databases http://martinfowler.com/bliki/AggregateOrientedDatabase.html and

, where the Aggregate is designed to respect closely the transaction boundaries.

I humbly disagree with this paragraph “Furthermore, in a relational store, with the data fully normalized, it would be a seven-table join to get everything out.”

Joining comments and likes together is like mixing apples and pears.

For the sake of simplicity, let’s say one user has just one friend and he has posted only once, and this post has 100 comments and 100 likes. If you join all 7 tables you end up with 10.000 rows (cartesian product of comments and likes) which is bad not only performance wise but also because you need a postprocess to get unique comments and unique likes. In this case you may want to issue 2 queries at a minimum: one for comments and one for likes. It will probably be faster and simpler.
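The row counts in this scenario can be checked with a small Python sketch against an in-memory SQLite database. The three-table schema below is a simplified assumption made for the illustration (it is not Diaspora's schema and omits the user and friend tables), but it reproduces the one post, 100 comments, 100 likes case.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE posts (id INTEGER PRIMARY KEY);
    CREATE TABLE comments (id INTEGER PRIMARY KEY, post_id INTEGER);
    CREATE TABLE likes (id INTEGER PRIMARY KEY, post_id INTEGER);
    INSERT INTO posts (id) VALUES (1);
""")
conn.executemany("INSERT INTO comments (post_id) VALUES (?)", [(1,)] * 100)
conn.executemany("INSERT INTO likes (post_id) VALUES (?)", [(1,)] * 100)

# One query joining both child tables: every comment pairs with every like.
joined_rows = conn.execute("""
    SELECT COUNT(*) FROM posts
    JOIN comments ON comments.post_id = posts.id
    JOIN likes ON likes.post_id = posts.id
""").fetchone()[0]

# Two separate queries: each returns only the rows it needs.
comment_rows = conn.execute(
    "SELECT COUNT(*) FROM comments WHERE post_id = 1").fetchone()[0]
like_rows = conn.execute(
    "SELECT COUNT(*) FROM likes WHERE post_id = 1").fetchone()[0]
```

The single join produces 100 × 100 rows that then have to be de-duplicated, while the two separate queries return 200 rows in total.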

Hi Sarah,

Thanks for this great article.

I read it at the precise moment I’m starting a new project and deciding how to store the data.

I have quite some experience with mongo and was tempted to use it, but I started seeing its drawbacks and your piece just clarified my thoughts.

Guess I’ll have to improve my psql. Thanks again

“This is patently false. There is always schema. The schema is the data itself. ” – what holy bullshit. Data is data and schema is schema…this is truly the most dumb statement of this discussion